Scaling Scaling: Stochastic Variational Inference for X-ray Diffraction Data

Inaugural MLCV Seminar

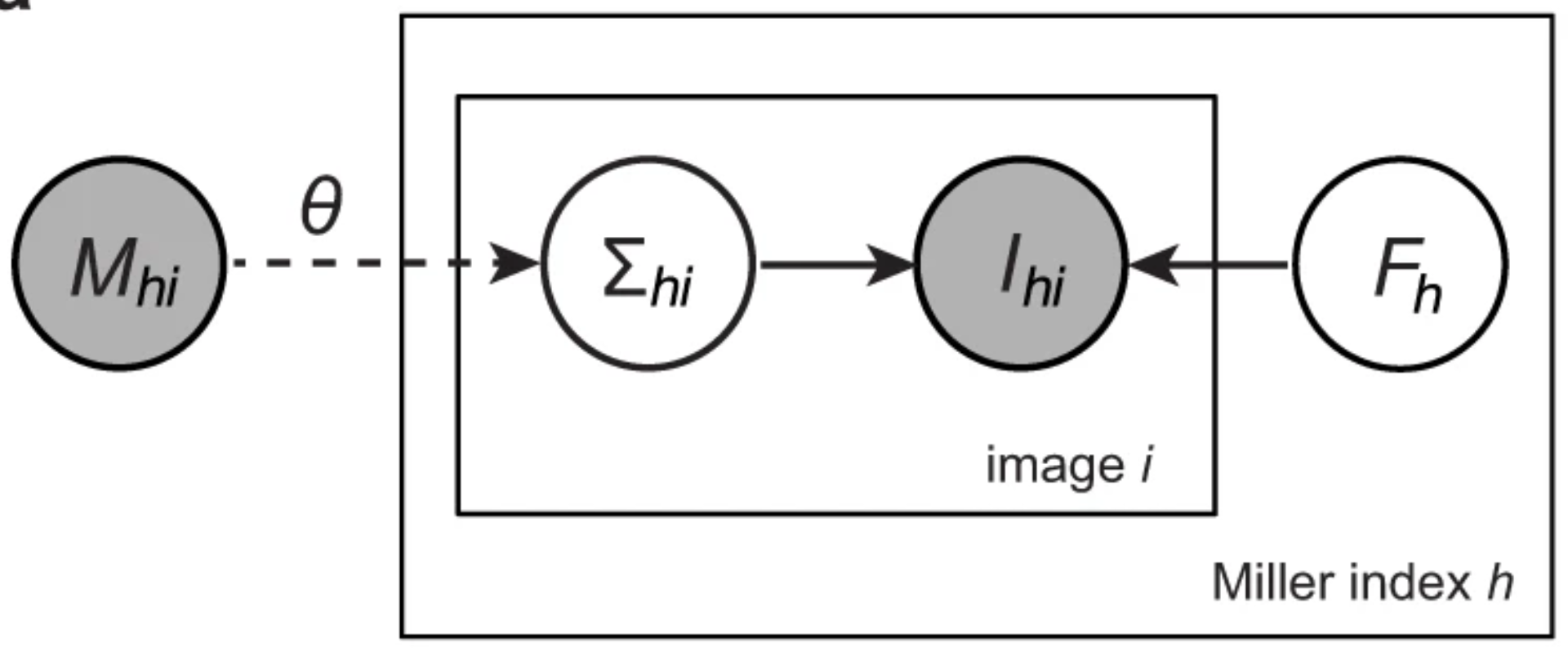

The systematic errors inherent in crystallographic data pose a core challenge to structure determination. These errors result from numerous processes, including absorption by the sample and surrounding material, polarization, and imperfections in the crystal lattice. Prior to merging redundant observations, these artifacts are typically corrected by an optimization procedure known as scaling. Recently, we showed that the two procedures, scaling and merging, can be unified into a single algorithm based on Bayesian (variational) inference and deep learning (Dalton et al., 2022). While this algorithm compared favorably to other algorithms across a broad range of experimental designs, its applicability was limited by its memory requirements. This was particularly prohibitive for serial crystallography, in which the number of diffraction images becomes too large to fit in memory. In this talk, I will introduce a solution to this problem. By adding a pooling mechanism to the neural scaling model from our previous work, it becomes possible to train the model on small batches of data. The memory requirements are then determined by the batch size rather than by the size of the full dataset. This innovation opens the door to treating very large datasets with hundreds of thousands or millions of diffraction images.
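To illustrate why training on small batches keeps memory tied to the batch size, the following is a minimal sketch of the general mini-batch idea behind stochastic variational inference. It is not the pooling mechanism or the scaling model from the talk: the Gaussian error model, the function names `gaussian_log_lik` and `minibatch_log_lik`, and the simulated data are all assumptions made for illustration. The sketch shows only that rescaling a batch log-likelihood by N/B gives an unbiased estimate of the full-data log-likelihood term, so each step touches B observations rather than all N.

```python
import numpy as np

def gaussian_log_lik(obs, pred, sigma):
    """Elementwise Gaussian log-likelihood (assumed error model, for illustration)."""
    return -0.5 * ((obs - pred) / sigma) ** 2 - np.log(sigma) - 0.5 * np.log(2 * np.pi)

def minibatch_log_lik(obs, pred, sigma, batch_idx):
    """Estimate the full-data log-likelihood from one batch.

    The batch sum is rescaled by N / B, which makes the estimate unbiased
    when the batch is drawn uniformly at random without replacement.
    """
    n_total = obs.shape[0]
    scale = n_total / len(batch_idx)
    batch_terms = gaussian_log_lik(obs[batch_idx], pred[batch_idx], sigma[batch_idx])
    return scale * batch_terms.sum()

# Simulated stand-in data (hypothetical; not real diffraction intensities).
rng = np.random.default_rng(0)
n = 10_000
pred = rng.normal(size=n)
sigma = np.full(n, 0.5)
obs = pred + sigma * rng.normal(size=n)

# Reference value computed over the full data set.
full = gaussian_log_lik(obs, pred, sigma).sum()

# Average many batch estimates; each one only reads 256 observations.
estimates = [
    minibatch_log_lik(obs, pred, sigma, rng.choice(n, size=256, replace=False))
    for _ in range(2000)
]
mean_estimate = float(np.mean(estimates))
```

In a full variational objective the likelihood term would be combined with a Kullback-Leibler term over the model parameters, which does not depend on the batch; only the likelihood term is sketched here.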